Abby McCloskey: Too many kids already know someone who's been deepfaked

Published in Op Eds

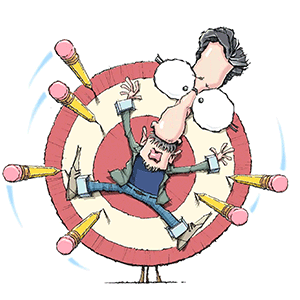

The pre-AI world is gone. Estimates suggest that as many as one in eight kids already personally knows someone who has been the target of a deepfake photo or video, and as many as one in four have seen a sexualized deepfake of someone they recognize, whether a friend or a celebrity. This is a real problem, and it’s one that lawmakers are suddenly waking up to.

In the 1980s, when I was a kid, it was a picture of a missing child on a milk carton from across the country that encapsulated parental fears. In 2026, it’s an AI-generated suggestive image of a loved one.

The increasing availability of AI nudification tools, such as those associated with Grok, has fueled skyrocketing reports of AI-generated child sexual abuse material: from roughly 4,700 in 2023 to over 440,000 in the first half of 2025 alone, according to the National Center for Missing & Exploited Children.

This is horrific, grimy stuff. It’s particularly difficult to read about — and write about — as a mom, because the ability to shield your child from it feels so beyond your control. Parents already struggle just to keep kids off social media, get screens out of classrooms or lock up household devices at night. And that’s after a decade’s worth of data on social media’s impact on kids.

Before we’ve even solved that problem, AI is taking the world by storm, especially among the young. More than four in 10 (42%) American teens report talking to AI chatbots as a friend or companion. The vast majority of students (86%) report using AI during the school year, according to Education Week. Even kids ages 5 to 12 are using generative AI. In several high-profile cases, parents say AI chatbots encouraged their teens to commit suicide.

Too many parents are out of the loop. Polling from Common Sense Media shows that parents consistently underestimate their children’s use of AI. Schools, too. The same survey found that few schools had communicated — or arguably even developed — an AI policy.

But there’s a shared sense of foreboding: Americans remain far more concerned (50%) than excited (10%) about the increased use of AI in daily life, and the vast majority believe that they have little to no ability to control it (87%).

Policymakers are on the move. On Tuesday, the Senate unanimously passed a bill, the Defiance Act, to allow victims of deepfake porn to sue the people who created the images. The UK and EU are investigating whether Grok was used to generate sexually explicit deepfake images of women and children without their consent, a potential violation of the UK’s Online Safety Act.

In the U.S., the Take It Down Act, passed by Congress and signed into law last year, criminalizes sexual deepfakes and requires platforms to remove the images within 48 hours; sharers could face prison time.

In my home state of Texas, we have some of the most aggressive AI laws in the country. The Securing Children Online through Parental Empowerment (SCOPE) Act of 2024, among other things, requires platforms to implement a strategy to prevent minors from being exposed to “harmful material.” It’s been illegal since Sept. 1, 2025, to create or distribute any sexually suggestive images without consent. Punishments range from felony charges and imprisonment to recurring fines. And this year, the Texas Responsible AI Governance Act (TRAIGA) goes into effect, banning AI development with the sole intent to create deepfakes.

Texas might not be known for its bipartisanship, but these efforts have been pushed in a bipartisan manner and framed (correctly) as protecting Texas children and parental rights. “In today’s digital age, we must continue to fight to protect Texas kids from deceptive and exploitative technology,” said Attorney General Ken Paxton, announcing his investigation into Meta AI Studio and Character.AI.

But we don’t know yet if these laws will be effective. For one, it’s all still so new. For another, the technology keeps changing.

And it doesn’t help that the creators of AI are tight with Washington. Big tech companies are the big boys in DC these days; their lobbying has grown significantly. Closer to home, Texas Democrats are concerned that Paxton might not push Musk over the Grok debacle given the billionaire’s thick GOP connections.

Under the Trump Administration, the Federal Trade Commission launched a formal inquiry into Big Tech, asking companies to detail how they test and monitor for potential negative impacts of chatbots on kids. But that’s essentially self-disclosure; these same companies haven’t exactly inspired confidence on that score with social media or, in the case of Grok, with deepfake child nudes.

More outside accountability is required, and it will take a multipronged approach. I’d like to see Health and Human Services incorporate AI’s challenge to kids’ well-being as part of the MAHA movement. A bipartisan commission could explore AI age limits, school policies and children’s relational skills. (Concerningly, there was little mention of AI in MAHA’s comprehensive report on child health last year.)

But even with federal and state action, the reality is that much of the AI world will be navigated by us parents. While there are steps that could limit children’s exposure to AI at younger ages, avoidance alone is not the answer. AI technology is already unavoidable. It’s in our computers, homes, schools, toys and workplaces, and the AI age is only just beginning.

More scaffolding is required. The deep work will fall to parents. Parents have always needed to raise children with strong spines, thick skins and moral virtue. The struggles of each era change, but that doesn’t. We will now need to raise children who have the sense of purpose, critical-thinking abilities and relational know-how to live with this new and already ubiquitous technology, with both its great promise and its dangers.

It’s a brave new world out there, indeed.

_____

This column reflects the personal views of the author and does not necessarily reflect the opinion of the editorial board or Bloomberg LP and its owners.

Abby McCloskey is a columnist, podcast host, and consultant. She directed domestic policy on two presidential campaigns and was director of economic policy at the American Enterprise Institute.

_____

©2026 Bloomberg L.P. Visit bloomberg.com/opinion. Distributed by Tribune Content Agency, LLC.
